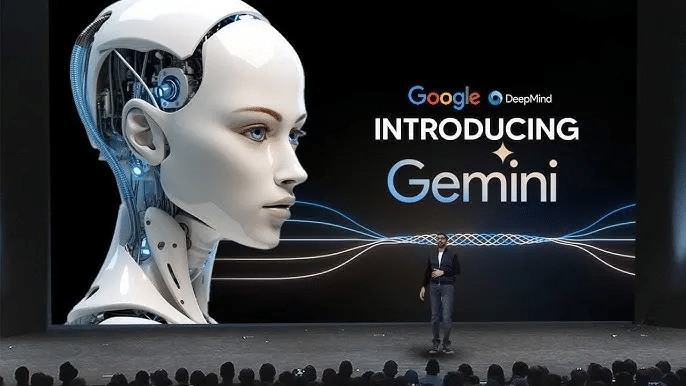

What is Gemini? Everything you need to know about Google’s latest AI model

Google has released Gemini, its most recent generative AI model. The company describes Gemini as its most capable and flexible AI model to date, and has said that it will expand and improve the large language model (LLM) over the next year. Because the LLM is multimodal, it can understand several data types, including text, audio, images, and video.

Google Gemini: What is it?

Google Gemini is Google’s most recent large language model (LLM). Developed by Google DeepMind, it has sophisticated multimodal processing capabilities: it can understand, operate on, and combine many kinds of data, such as text, code, audio, images, and video.

The model comes in three variants: Ultra, Pro, and Nano. Each is intended for a different level of task complexity. According to Google, Gemini has exceeded the previous best results on a range of industry benchmarks. Its versatility across platforms and its adherence to responsible AI principles, backed by rigorous safety and bias testing, set it apart. Google plans to integrate Gemini into its products and is making it available through Google Cloud Vertex AI and Google AI Studio. Notably, Gemini Pro is suited to a wide range of tasks and is offered for free.

How is Google Gemini used?

How you use Google Gemini depends on the version and on the product it is integrated into. With Google Bard, for instance, users can submit questions and get answers on everything from weather forecasts to poetry writing to coding help, all while being protected from offensive material.

For Pixel 8 Pro users, Gemini Nano works with Gboard to offer suggested replies in messaging apps such as WhatsApp. Nano can also summarize recorded conversations in the Recorder app, even when the phone is offline.

Although Gemini Ultra’s full capabilities have not yet been disclosed, it appears to be designed for complex tasks and may be aimed at researchers and business users. It is expected to be included in Bard Advanced, a version of Google’s chatbot, and its release should show more of what the model can do.

GPT-4 vs Google Gemini: What’s the difference?

With its multimodal processing capabilities, Google Gemini excels at handling text, code, audio, images, and video. Its main strength is the ability to integrate and manipulate many kinds of data, which allows for flexible interactions across several modalities. GPT models such as GPT-3 and GPT-4, by contrast, perform very well on natural language tasks but may not match Gemini’s breadth across data formats beyond text. In short, Gemini stands out for its skill with multimodal inputs, while GPT models are mostly used for text-based tasks.

Google published results for eight text-based benchmarks, with Gemini coming out on top in seven. Google also claims that Gemini won all ten of the multimodal benchmarks.

That would appear to suggest that Gemini is the better model, though it’s not quite that simple. GPT-4 was released in March 2023, so Gemini is effectively catching up to a nine-month-old AI tool. It’s difficult to say which will come out ahead, since we don’t know how powerful OpenAI’s next GPT version will be.

Furthermore, Google compared only Gemini Ultra against GPT-4. How Gemini Pro and Nano fare against GPT-4 is therefore unknown, but given the often narrow margins separating GPT-4 and Gemini Ultra, OpenAI’s model most likely outperforms both.

In summary

With its multimodal proficiency, Google Gemini proves to be a capable multitasker at a time when artificial intelligence increasingly shapes how people interact with technology. Although it leads in most of the benchmarks Google reported, the contest with GPT-4 remains close, and no clear winner has emerged. Both systems are still developing, which points to a bright future for AI research.
