NEW YORK — Artificial intelligence imaging can be used to create art, try on clothes in virtual fitting rooms, or help design advertising campaigns.

However, experts fear that the darker side of these easily accessible tools could worsen something that primarily harms women: nonconsensual deepfake pornography.

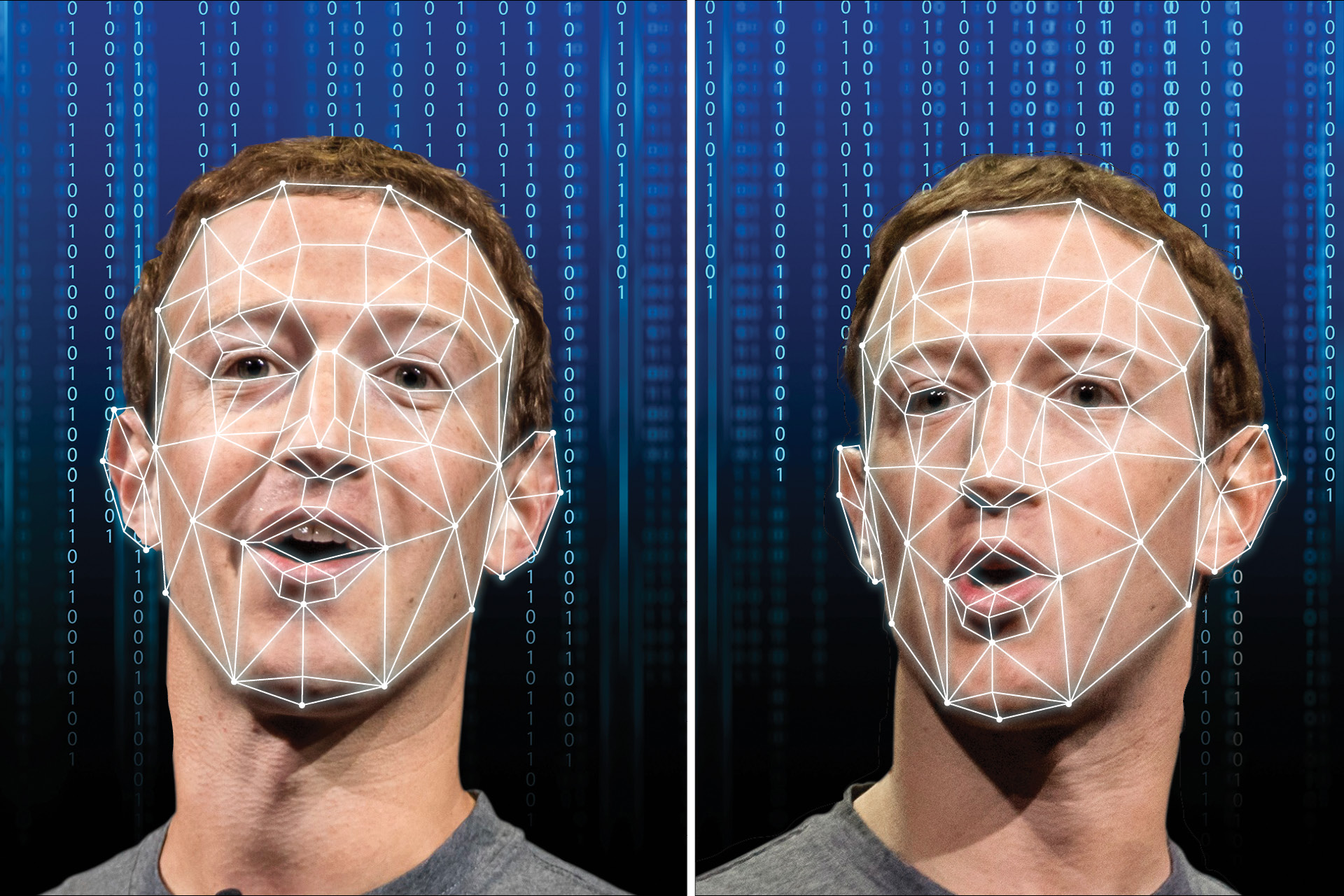

Deepfakes are videos and images that have been digitally created or altered with artificial intelligence or machine learning. Porn made with the technology first began spreading across the internet several years ago, when a Reddit user shared clips that placed the faces of female celebrities on the shoulders of porn actors.

Since then, deepfake creators have distributed similar videos and images targeting online influencers, journalists, and others with a public profile. Thousands of such videos exist across a variety of websites. Some sites also let users generate their own images, effectively allowing anyone to turn whoever they wish into a sexual fantasy without that person's consent, or to use the technology to harm former partners.

Experts say the problem grew as it became easier to make sophisticated and visually convincing deepfakes. They also warn that it could get worse with the development of generative AI tools, which are trained on billions of images from the internet and produce novel content from existing data.

“The reality is that the technology will continue to proliferate, develop, and become sort of as easy as pushing a button,” said Adam Dodge, founder of EndTAB, a group that provides training on technology-enabled abuse. “And as long as that happens, people will undoubtedly… continue to misuse that technology to harm others, primarily through online sexual violence, deepfake pornography, and fake nude images.”

Noelle Martin of Perth, Australia, knows that reality firsthand. The 28-year-old discovered deepfake porn of herself 10 years ago when, out of curiosity, she used Google to search for an image of herself. Martin says she does not know who created the fake images, or the videos of her engaging in sexual intercourse that she later found. She suspects someone took a photo posted on her social media page or elsewhere and doctored it into porn.


Horrified, Martin contacted various websites over several years in an effort to get the images taken down. Some never responded. Others removed the material, but she soon found it posted again.

“You cannot win,” Martin said. “This is something that will always be out there. It’s as if it has permanently ruined you.”

She said the more she spoke out, the more the problem escalated. Some people even told her that the way she dressed and posted images on social media contributed to the harassment, blaming her rather than the creators of the images.

Martin eventually turned her attention to legislation, advocating for a national law in Australia that would fine companies 555,000 Australian dollars ($370,706) if they failed to comply with removal notices for such content from online safety regulators.

But governing the internet is next to impossible when each country has its own laws for content that is sometimes made halfway around the world. Martin, now an attorney and legal researcher at the University of Western Australia, believes the problem has to be addressed through some kind of global solution.

In the meantime, some AI models say they are already restricting access to explicit images.

OpenAI says it removed explicit content from the data used to train its image-generating tool DALL-E, which limits users' ability to create those types of images. The company also filters requests and says it blocks users from creating AI images of celebrities and prominent politicians. Midjourney, another model, blocks the use of certain keywords and encourages users to flag problematic images to moderators.

In November, the startup Stability AI rolled out an update that removed the ability to create explicit images with its image generator, Stable Diffusion. The changes followed reports that some users were using the technology to create celebrity-inspired nude pictures.

Stability AI spokesperson Motez Bishara said the filter uses a combination of keywords and other techniques, such as image recognition, to detect nudity and returns a blurred image instead. However, because the company releases its code to the public, users can modify the software and generate whatever they want. Bishara said Stability AI's license “extends to third-party applications built on Stable Diffusion” and explicitly prohibits “any misuse for illegal or immoral purposes.”

Some social media companies have also tightened their rules to better protect their platforms against harmful material.

TikTok said last month that all deepfakes or manipulated content showing realistic scenes must be labeled to indicate that they are fake or altered in some way, and that deepfakes of private figures and young people are no longer allowed. Previously, the company had banned sexually explicit content and deepfakes that mislead viewers about real-world events and cause harm.

Twitch also recently updated its policies on explicit deepfake images after a popular streamer named Atrioc was seen with a deepfake porn website open in his browser during a livestream in late January. The site featured fake images of fellow Twitch streamers.

Twitch already prohibited explicit deepfakes, but now even showing a glimpse of such content, including when it is meant to express outrage, “will be removed and will result in an enforcement,” the company wrote in a blog post. Intentionally promoting, creating, or sharing the material is grounds for an immediate ban.


Other companies have also tried to ban deepfakes from their platforms, but keeping them off requires constant vigilance.

Apple and Google said recently that they had removed an app from their app stores that was running sexually suggestive deepfake videos of actresses to market the product. Research into deepfake porn is scarce, but one report released in 2019 by the AI firm DeepTrace Labs found that it was almost entirely weaponized against women, with Western actresses the most targeted, followed by South Korean K-pop singers.

The same app that Google and Apple removed had been running ads on Meta's platforms, which include Facebook, Instagram, and Messenger. Meta spokesperson Dani Lever said the company's policy restricts both AI-generated and non-AI adult content, and that it has blocked the app's page from advertising on its platforms.

In February, Meta, along with adult sites such as OnlyFans and Pornhub, began participating in Take It Down, an online tool that lets teens report explicit images and videos of themselves circulating on the internet. The reporting service handles both ordinary photos and AI-generated content, the latter a growing concern for child safety groups.

“When people ask our senior leadership what the boulders coming down the hill are that we’re worried about, the first is end-to-end encryption and its implications for child protection. The second is artificial intelligence, notably deepfakes,” said Gavin Portnoy, a spokesperson for the National Center for Missing and Exploited Children, which operates the Take It Down service.

“We have not… yet been able to respond directly to it,” Portnoy said.

SOURCE – (AP)