Claims circulating online in India recently misstated details about voting, asserted without evidence that the election would be manipulated, and called for violence against India’s Muslims.

Researchers who study misinformation and hate speech in India say tech companies’ lax enforcement of their own policies has created ideal conditions for harmful content that could distort public opinion, incite violence, and leave millions of voters unsure what to trust.

“A non-discerning user or regular user has no idea whether it’s someone, an individual sharing his or her thoughts on the other end, or is it a bot?” Rekha Singh, a 49-year-old voter, told the Associated Press. Singh said she worries that social media algorithms shape voters’ perceptions of the truth. “So you are biased without even realizing it,” she said.

In a year filled with major elections, India’s sweeping vote stands out. The world’s most populous country speaks dozens of languages and has the highest number of WhatsApp users and YouTube subscribers. Nearly 1 billion citizens are eligible to vote in the election, which will take place through June.

Google and Meta, which owns Facebook, WhatsApp, and Instagram, say they are working to prevent false or hateful content while helping voters find credible sources. However, experts who have long followed disinformation in India say those assurances ring hollow after years of failed enforcement and “cookie-cutter” approaches that ignore India’s linguistic, religious, geographic, and cultural diversity.

Given the country’s size and its importance to social media businesses, one would expect the platforms to pay closer attention, say disinformation experts who specialize in India.

“The platforms get money from this. They benefit from it, and the entire country pays the price,” said Ritumbra Manuvie, a law professor at the University of Groningen in the Netherlands. Manuvie is the leader of The London Story, an Indian diaspora group that staged a protest outside Meta’s London offices last month.

The group and another organization, India Civil Watch International, discovered that Meta allowed political advertisements and posts that contained anti-Muslim hate speech, Hindu nationalist narratives, misogynistic posts about female candidates, and ads encouraging violence against political opponents.

The advertisements were viewed over 65 million times in 90 days earlier this year. They collectively cost more than $1 million.

Meta defends its work on global elections and disputes the findings of the India study, noting that it has expanded its collaboration with independent fact-checking organizations ahead of the election and has employees around the world ready to act if its platforms are used to spread misinformation. Nick Clegg, Meta’s president of global affairs, said of India’s election, “It’s a huge, huge test for us.”

“We have months and months of preparation in India,” Clegg told the Associated Press in a recent interview. “Our teams work around the clock. We have fact checkers in India who speak several languages. We have a 24-hour escalation system.”

YouTube in India

Experts think YouTube is another significant disinformation source in India. To see how well the video-sharing platform enforced its own rules, researchers from the organizations Global Witness and Access Now constructed 48 fake ads in English, Hindi, and Telugu that contained inaccurate voting information or calls to violence.

One stated that India had raised the voting age to 21, although it remains 18, and another claimed that women could vote via text message, which they cannot. A third called for the use of force at polling places.

When Global Witness submitted the ads to YouTube for approval, the response was disappointing, according to Henry Peck, an investigator with Global Witness.

“YouTube didn’t act on any of them,” Peck said. The platform instead approved the ads for publication.

Google, YouTube’s owner, rejected the study and said it has several procedures in place to detect ads that breach its policies. The company said Global Witness removed the ads before its systems could identify and block them.

“Our policies explicitly prohibit ads making demonstrably false claims that could undermine participation or trust in an election, which we enforce in several Indian languages,” the company said in a statement. The corporation also mentioned its relationships with fact-checking organizations.

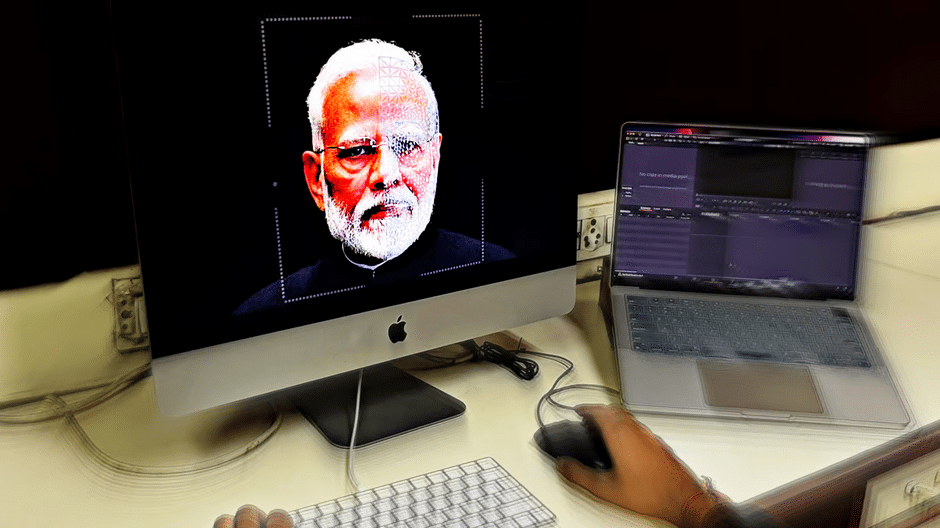

AI deepfakes

AI is this year’s newest threat, as technological advancements make it easier than ever to create lifelike images, video, and voice. AI deepfakes are appearing in elections around the world, from Moldova to Bangladesh.

Senthil Nayagam, founder of Muonium AI, believes there is a growing market for deepfakes, particularly among politicians. In the run-up to the election, he received several requests to create political videos with AI. “There’s a market for this, no doubt,” he said.

Some of the fakes produced by Nayagam feature deceased politicians and are not intended to be taken seriously, while other deepfakes circulating online have the potential to mislead voters. Prime Minister Narendra Modi has underlined the threat.

“We need to educate people about artificial intelligence and deepfakes, how it works, what it can do,” he stated.

India’s Information and Technology Ministry has asked social media companies to remove disinformation, including deepfakes. However, experts argue that the lack of specific regulation or law addressing AI and deepfakes makes them harder to combat, leaving it up to voters to decide what is true and what is fiction.

Ankita Jasra, 18, a first-time voter, says these ambiguities make it difficult to know what to believe.

“If I don’t know what is being said is true, I don’t think I can trust in the people that are governing my country,” she said.

Source: The Associated Press